The landscape of operating systems (OS) has undergone a remarkable transformation over the past six decades. From the rudimentary systems of the early days to the complex, user-friendly interfaces that power modern devices, the evolution of the OS has been a cornerstone of technological progress. Initially, operating systems bridged the gap between the raw hardware and user applications, translating binary operations into meaningful tasks. As technology matured, operating systems grew in sophistication, embracing features like multitasking and time-sharing, enabling them to manage a multitude of complex tasks simultaneously. The arrival of graphical user interfaces (GUIs) like Windows and macOS further revolutionized the user experience, making interaction intuitive and fostering a rich ecosystem of runtime libraries and developer tools.

The integration of Large Language Models (LLMs) marks a new chapter in this ongoing narrative. LLMs have been adopted across many industries, and LLM-based intelligent agents are evolving rapidly, approaching human-like performance on a wide range of tasks. However, these agents remain in their infancy, and current techniques face hurdles that limit their effectiveness. These challenges include inefficient scheduling of agent requests over the LLM, difficulty integrating agents with diverse functionalities, and difficulty maintaining context during interactions with the LLM. Moreover, the rapid development and increasing complexity of LLM-based agents often lead to resource bottlenecks and suboptimal utilization.

Enter AIOS: The LLM Agent Operating System

This article introduces AIOS, a groundbreaking initiative that aims to address these limitations. AIOS reimagines the operating system by integrating LLMs as its central core, effectively imbuing it with a “soul” that empowers intelligent decision-making. The AIOS framework tackles key challenges by facilitating context switching across agents, optimizing resource allocation, providing essential tools for agent development, maintaining access control, and enabling concurrent execution of multiple agents.

Delving Deeper: Unveiling the Mechanisms and Architecture of AIOS

We will embark on a deep dive into the AIOS framework, exploring its unique mechanisms, its underlying methodology, and the innovative architecture that sets it apart. By comparing AIOS with existing state-of-the-art frameworks, we’ll illuminate its potential to revolutionize the way we interact with and utilize intelligent agents.

The Rise of Autonomous Agents: LLMs Take Center Stage

The field of Artificial Intelligence (AI) and Machine Learning (ML) is experiencing a paradigm shift. Having achieved remarkable success with Large Language Models (LLMs), the focus is now on developing intelligent agents capable of independent operation, decision-making, and task execution with minimal human intervention.

These autonomous AI agents are designed to be self-sufficient. They can understand human instructions, process information, make informed choices, and take the actions needed to complete a task on their own. The advent of LLMs has breathed new life into the development of these intelligent agents.

LLMs: The Powerhouse Behind Autonomous Agents

Current LLMs, such as the GPT family, have showcased impressive capabilities. They can not only grasp human instructions but also demonstrate reasoning, problem-solving skills, and the ability to interact with users and their surroundings. Building upon these powerful LLMs, LLM-based agents can fulfill tasks across diverse environments, from virtual assistants for everyday needs to more intricate systems that involve problem-solving, complex reasoning, planning, and execution.

The Power and Challenges of LLM-based Agents: Enter AIOS

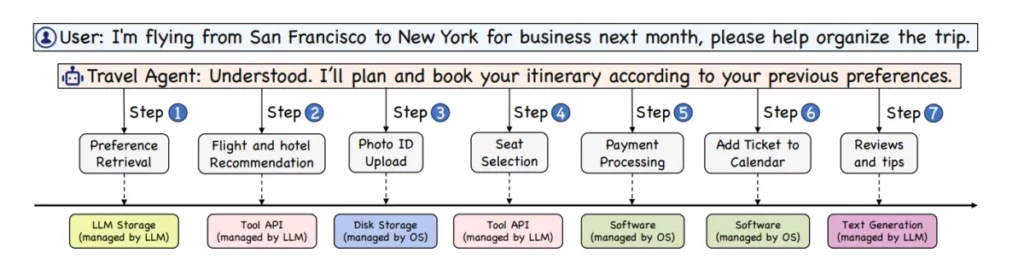

The above scenario exemplifies how an LLM-based autonomous agent can conquer real-world tasks. Imagine requesting a trip itinerary – the agent seamlessly decomposes it into actionable steps. Booking flights, securing hotels, and processing payments – all handled autonomously.

What truly differentiates these agents from traditional software is their decision-making ability: they can reason and adapt while executing tasks. However, as the number of these agents grows, they place increasing strain on the underlying Large Language Models (LLMs) and operating systems (OS).

Bottlenecks and Roadblocks: Challenges in LLM Agent Operations

One significant challenge lies in prioritizing and scheduling agent requests, especially when LLM resources are limited. Additionally, generating responses for lengthy contexts can be time-consuming, so a scheduler may need to suspend a generation that is still in progress. Suspension raises the problem of capturing the LLM’s current generation state, which makes “pause/resume” functionality crucial whenever a response is only partially generated.

AIOS: A Novel Operating System for the LLM Era

AIOS, a groundbreaking LLM operating system, emerges as a solution to these challenges. It offers a unique approach by aggregating and isolating functionalities of LLMs and the OS.

The AIOS Framework: An LLM-Centric Kernel for Enhanced Management

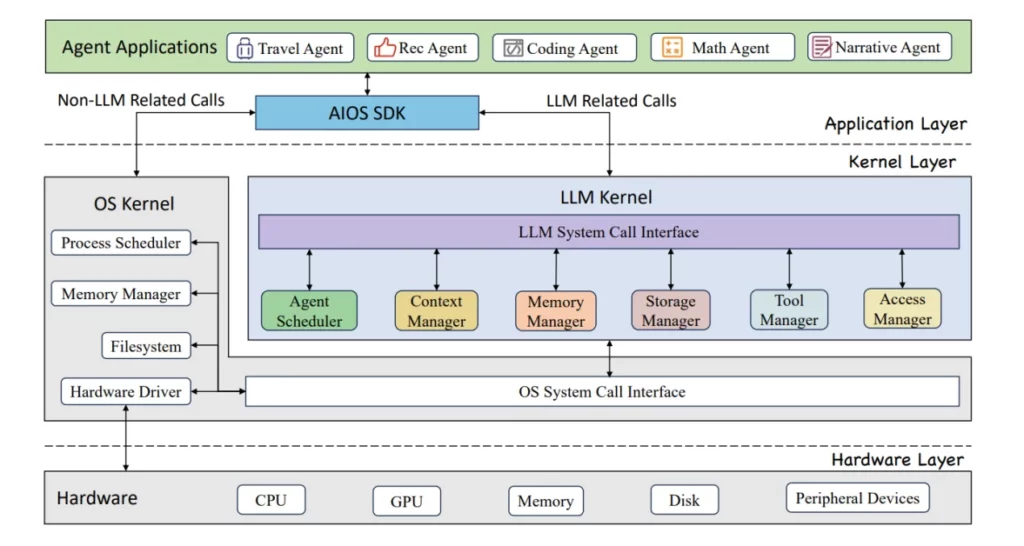

AIOS proposes an LLM-specific kernel design to prevent conflicts between LLM-related tasks and non-LLM tasks. This kernel essentially partitions the OS, segregating duties that oversee LLM agents, development toolkits, and their corresponding resources. This segregation empowers the LLM kernel to optimize the coordination and management of LLM-related activities.

In essence, AIOS tackles the growing complexities of LLM-based agents by providing a dedicated OS-level infrastructure, paving the way for a future where intelligent agents can operate seamlessly and efficiently.

AIOS Framework: A Deep Dive into Mechanisms and Architecture

AIOS, the innovative LLM operating system, orchestrates the seamless operation of intelligent agents. Let’s delve into the key mechanisms that power its functionality and explore its layered architecture.

Mechanisms for Optimized Agent Operations

- Agent Scheduler: This vital component ensures efficient utilization of the LLM by prioritizing and scheduling agent requests effectively. Imagine a busy traffic controller – the agent scheduler ensures a smooth flow of tasks without bottlenecks.

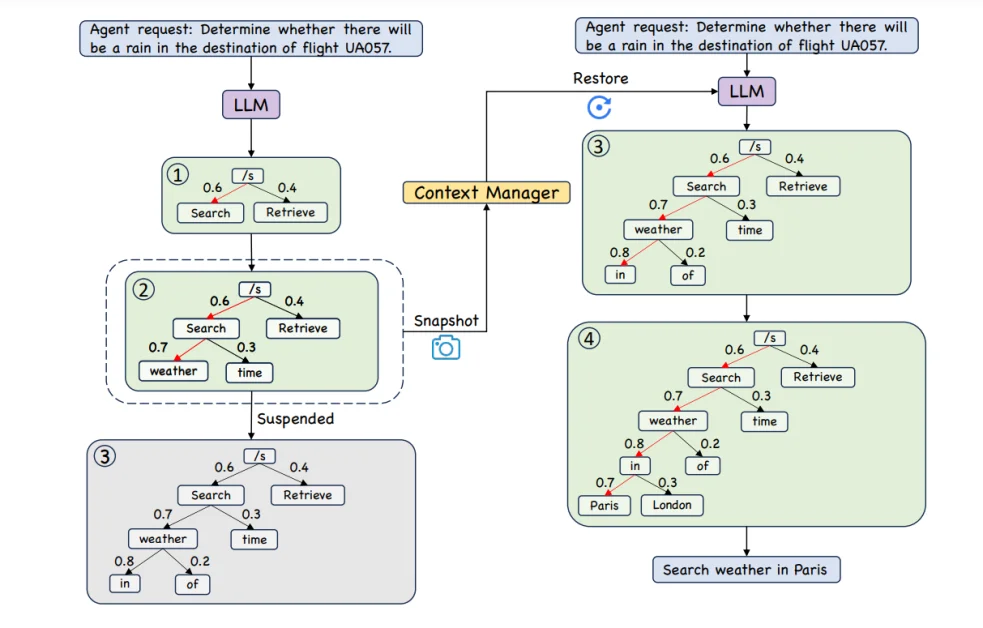

- Context Manager: Preserving progress is crucial. The context manager facilitates snapshots and restoration of the LLM’s intermediate generation state, akin to bookmarking a long read. Additionally, it manages the context window within the LLM, ensuring focus on relevant information for optimal performance.

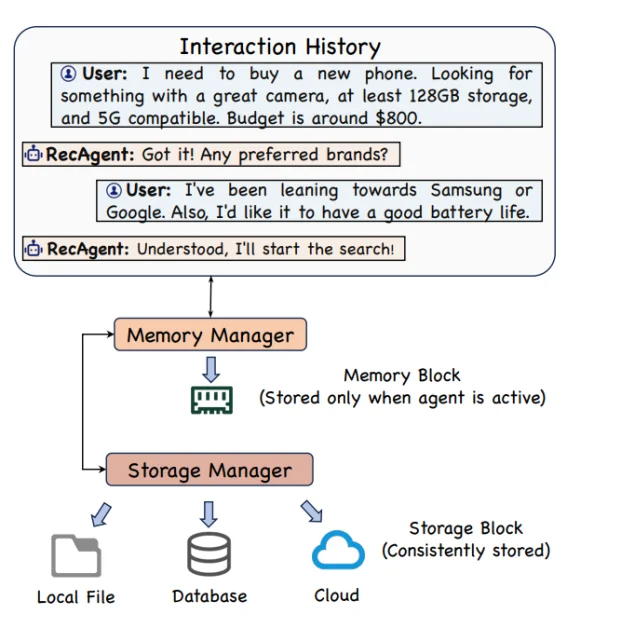

- Memory Manager: Each agent interacts with the system. The memory manager provides short-term memory for the interaction log, storing the ongoing conversation history for each agent.

- Storage Manager: Long-term storage is essential. The storage manager ensures the interaction logs of agents are persisted for future retrieval, similar to archiving past conversations.

- Tool Manager: The external world offers valuable tools. The tool manager facilitates the invocation of external API tools by agents, allowing them to leverage external resources when needed.

- Access Manager: Security is paramount. The access manager enforces privacy and access control policies, ensuring only authorized agents can access specific resources. This safeguard prevents unauthorized interactions and protects sensitive information.

Layered Architecture for Seamless Interaction

AIOS boasts a meticulously designed layered architecture that streamlines interaction and simplifies development.

- Application Layer: This layer empowers developers to create and deploy intelligent agents like math or travel agents. Here, the AIOS Software Development Kit (AIOS SDK) provides a higher level of abstraction for system calls, streamlining the development process. Imagine a pre-built toolbox – the AIOS SDK offers a rich set of tools that simplify agent development by abstracting away complex system functions. Developers can focus on core functionalities and logic, resulting in faster and more efficient agent creation.

- Kernel Layer: This layer acts as the system’s core and is divided into two components:

  - LLM Kernel: This kernel handles LLM-specific operations such as agent scheduling and context management, which are crucial for effective LLM utilization. Notably, the AIOS framework focuses on enhancing the LLM kernel without significantly altering the existing operating system kernel. Within the LLM kernel, several modules work in concert: the Agent Scheduler, Context Manager, Memory Manager, Storage Manager, Tool Manager, and Access Manager. Together they address the diverse execution needs of agent applications.

  - OS Kernel: This component manages non-LLM tasks, building upon the existing functionalities of the operating system.

- Hardware Layer: This layer comprises the physical components of the system – the CPU, GPU, memory, disk, and peripheral devices. Importantly, the LLM kernel interacts with the hardware indirectly through the operating system’s system calls. This layered approach provides a security and abstraction benefit. The LLM kernel can leverage hardware resources without managing them directly, ensuring system integrity and efficiency.
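
The layering described above can be sketched in a few lines of Python. This is only an illustration of the idea that agents talk to an SDK, which in turn issues system calls to the LLM kernel. The class names (`LLMKernel`, `SDK`, `MathAgent`), the `syscall` method, and the `"llm_generate"` call name are all hypothetical and are not taken from the AIOS codebase.

```python
class LLMKernel:
    """Hypothetical kernel-layer object exposing one low-level system call."""

    def syscall(self, name, payload):
        if name == "llm_generate":
            # Stand-in for a real model call; it simply echoes the prompt.
            return f"echo: {payload['prompt']}"
        raise ValueError(f"unsupported syscall: {name}")


class SDK:
    """Hypothetical application-layer wrapper that hides syscall details."""

    def __init__(self, kernel):
        self._kernel = kernel

    def ask(self, prompt):
        return self._kernel.syscall("llm_generate", {"prompt": prompt})


class MathAgent:
    """Agent code only sees the SDK, never the kernel or the hardware."""

    def __init__(self, sdk):
        self._sdk = sdk

    def run(self, question):
        return self._sdk.ask(f"Solve: {question}")


agent = MathAgent(SDK(LLMKernel()))
print(agent.run("2 + 2"))
```

The agent depends only on `SDK.ask`, so the kernel's call interface could change without touching agent code, which is the abstraction benefit the application layer is meant to provide.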

AIOS in Action: Mechanisms for Seamless Agent Operation

We’ve explored the core mechanisms that power AIOS. Now, let’s delve deeper into how they translate to real-world functionality.

Prioritizing Tasks and Minimizing Wait Times

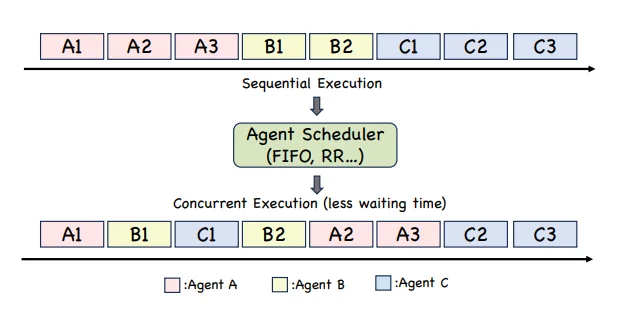

Unlike traditional sequential execution, where tasks are processed one after another, the AIOS agent scheduler employs a more sophisticated approach. It leverages strategies like Round Robin and First In First Out (FIFO) to manage agent requests efficiently. Imagine a conductor orchestrating an orchestra – the scheduler ensures tasks are handled strategically, minimizing wait times for agents further down the queue.
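
The difference between the two strategies can be illustrated with a toy simulation. This is a minimal sketch and not the AIOS scheduler itself: the `Request` class, its `steps` field (abstract units of LLM work), and the `quantum` parameter are hypothetical names chosen for this example.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Request:
    agent: str   # hypothetical agent name
    steps: int   # hypothetical units of LLM work still needed


def fifo(requests):
    """Run each request to completion in arrival order."""
    order = []
    for req in requests:
        order.extend([req.agent] * req.steps)
    return order


def round_robin(requests, quantum=1):
    """Give each request at most `quantum` steps, then requeue it if unfinished."""
    # Copy the requests so the caller's objects are not modified.
    queue = deque(Request(r.agent, r.steps) for r in requests)
    order = []
    while queue:
        req = queue.popleft()
        run = min(quantum, req.steps)
        order.extend([req.agent] * run)
        req.steps -= run
        if req.steps > 0:
            queue.append(req)  # suspended here; resuming needs a saved context
    return order


requests = [Request("math", 3), Request("narrate", 1), Request("recommend", 2)]
print(fifo(requests))
print(round_robin(requests))
```

Under FIFO the short `narrate` request waits for all three `math` steps, while under Round Robin it finishes after the second step overall. The price is that `math` is suspended mid-request, which is why a context snapshot mechanism is needed.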

Context Management: Preserving Progress and Focus

The context manager plays a crucial role in maintaining the LLM’s focus and enabling progress resumption. It encompasses two key functionalities:

- Context Snapshot and Restoration: This feature tackles situations where the scheduler suspends agent requests. Similar to bookmarking a long webpage, the context manager captures the LLM’s current state, allowing it to resume seamlessly when processing restarts.

- Context Window Management: The context manager ensures the LLM focuses on relevant information for optimal performance. Imagine reading a vast document – the context window narrows down the focus to the specific section being processed, maximizing efficiency.
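
Both functionalities can be sketched with a toy decoder. The example below assumes that the state worth saving is simply the list of tokens generated so far; a real system would need to capture the decoder's internal state as well. `Snapshot`, `toy_next_token`, `generate`, `budget`, and `trim_to_window` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Snapshot:
    prompt: list
    generated: list = field(default_factory=list)


def toy_next_token(prompt, generated):
    """Stand-in for one decoding step: deterministic given the tokens so far."""
    return f"tok{len(prompt) + len(generated)}"


def generate(snapshot, max_new_tokens, budget):
    """Decode at most `budget` tokens, then return a (possibly unfinished) snapshot."""
    generated = list(snapshot.generated)
    while len(generated) < max_new_tokens and budget > 0:
        generated.append(toy_next_token(snapshot.prompt, generated))
        budget -= 1
    return Snapshot(snapshot.prompt, generated)


def trim_to_window(tokens, window):
    """Keep only the most recent `window` tokens."""
    return tokens[max(0, len(tokens) - window):]


prompt = ["plan", "a", "trip"]
uninterrupted = generate(Snapshot(prompt), max_new_tokens=5, budget=5)
paused = generate(Snapshot(prompt), max_new_tokens=5, budget=2)  # suspended early
resumed = generate(paused, max_new_tokens=5, budget=5)           # restored later

print(resumed.generated == uninterrupted.generated)
print(trim_to_window(prompt + resumed.generated, window=4))
```

Because the resumed run continues from the saved tokens, it produces the same output as the uninterrupted run, which is the property the snapshot mechanism is meant to guarantee.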

Memory Management: Balancing Short-Term Needs

Each agent interacts with the system, generating data. The memory manager handles this short-term memory aspect. It ensures data is readily available for an active agent, whether it’s currently executing or waiting its turn in the queue. Think of it as a workspace for each agent, storing relevant information for easy access during processing.

Long-Term Storage: Safeguarding Information

The storage manager takes over when long-term data preservation is required. It ensures information exceeding an individual agent’s lifespan is stored for future retrieval. Imagine an archive – the storage manager utilizes various durable mediums like cloud storage, databases, and local files to guarantee data availability and integrity.
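
The hand-off between short-term memory and long-term storage might look like the following sketch. It assumes interaction logs are lists of strings and that local JSON files are the durable medium. The `MemoryManager` and `StorageManager` classes and their methods are hypothetical illustrations, not the AIOS API.

```python
import json
import tempfile
from collections import defaultdict
from pathlib import Path


class MemoryManager:
    """Short-term, in-process interaction log per agent."""

    def __init__(self):
        self._logs = defaultdict(list)

    def append(self, agent_id, message):
        self._logs[agent_id].append(message)

    def read(self, agent_id):
        return list(self._logs[agent_id])

    def release(self, agent_id):
        """Drop an agent's short-term log and return its contents."""
        return self._logs.pop(agent_id, [])


class StorageManager:
    """Long-term storage: one JSON file per agent under `root`."""

    def __init__(self, root):
        self._root = Path(root)
        self._root.mkdir(parents=True, exist_ok=True)

    def persist(self, agent_id, log):
        (self._root / f"{agent_id}.json").write_text(json.dumps(log))

    def load(self, agent_id):
        path = self._root / f"{agent_id}.json"
        return json.loads(path.read_text()) if path.exists() else []


memory = MemoryManager()
memory.append("travel", "user: book a flight")
memory.append("travel", "agent: flight booked")

with tempfile.TemporaryDirectory() as tmp:
    storage = StorageManager(tmp)
    # When the agent finishes, its short-term log is moved to durable storage.
    storage.persist("travel", memory.release("travel"))
    restored = storage.load("travel")
    missing = storage.load("math")

print(memory.read("travel"))
print(restored)
```

After the hand-off the in-memory log is empty, while the persisted copy can still be loaded, matching the split between an agent's working data and data that outlives it.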

Tool Integration: Expanding Capabilities

The tool manager gives agents access to a diverse range of external API tools. It integrates commonly used tools from various sources and groups them by category for easy reference. This allows agents to draw on external functionality, enriching their capabilities.
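
A tool manager of this kind is essentially a registry that maps tool names to callables. The sketch below is a hypothetical illustration in which local functions stand in for external APIs. The `ToolManager` class, the `register` and `call` methods, and the category-style names such as `"math.add"` are assumptions made for this example.

```python
class ToolManager:
    """Hypothetical registry mapping tool names to callables."""

    def __init__(self):
        self._tools = {}

    def register(self, name, func):
        self._tools[name] = func

    def call(self, name, **kwargs):
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name](**kwargs)


# Local stand-ins for external API tools.
def add(a, b):
    return a + b


def word_count(text):
    return len(text.split())


tools = ToolManager()
tools.register("math.add", add)
tools.register("text.word_count", word_count)

print(tools.call("math.add", a=2, b=3))
print(tools.call("text.word_count", text="book a flight to Paris"))
```

Routing every invocation through a single `call` method gives the system one place to add validation, logging, or access checks before a tool runs.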

Access Control: Ensuring Security and Transparency

Security is paramount. The access manager enforces this by assigning a dedicated privilege group to each agent. This group dictates the resources accessible to the agent, preventing unauthorized access and safeguarding sensitive information. Additionally, the access manager maintains audit logs, enhancing system transparency by recording access attempts.
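
The privilege-group and audit-log ideas can be sketched as follows. This is a minimal illustration under the assumption that a privilege group is just a set of resource names per agent. `AccessManager`, `grant`, `check`, and the resource name `"travel.logs"` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AccessManager:
    groups: dict = field(default_factory=dict)     # agent_id -> set of resources
    audit_log: list = field(default_factory=list)  # (agent_id, resource, allowed)

    def grant(self, agent_id, resource):
        self.groups.setdefault(agent_id, set()).add(resource)

    def check(self, agent_id, resource):
        """Return whether access is allowed and record the attempt either way."""
        allowed = resource in self.groups.get(agent_id, set())
        self.audit_log.append((agent_id, resource, allowed))
        return allowed


access = AccessManager()
access.grant("travel", "travel.logs")

print(access.check("travel", "travel.logs"))  # the owning agent
print(access.check("math", "travel.logs"))    # a different agent
print(access.audit_log)
```

Denied attempts are logged as well as granted ones, so the audit trail shows who tried to reach a resource, not only who succeeded.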

AIOS: Measuring Success – Efficiency and Consistency

Evaluating the AIOS framework involved two key research questions:

- Scheduling Efficiency: Does AIOS scheduling improve the balance between waiting time and turnaround time for agents?

- Response Consistency: Do LLMs maintain consistent responses to agent requests even after agent suspension?

Ensuring Consistent Responses after Suspension

To address consistency, the researchers ran each of the three agents (Math, Narrating, and Recommendation) individually and then in parallel, capturing the outputs at each stage. They then compared the single-agent and multi-agent outputs using two text-similarity metrics, BLEU score and BERT score. The results, presented in a table (not shown here), revealed a perfect match (BERT and BLEU scores of 1.0) between single-agent and multi-agent outputs. This indicates that AIOS manages context switching without compromising response consistency.

Scheduling Efficiency: Balancing Waiting and Turnaround Times

To assess scheduling efficiency, AIOS with FIFO (First In, First Out) scheduling was compared to a non-scheduled approach where agents run concurrently in a predefined order (Math, Narrating, then Recommendation agent). Waiting time and turnaround time were measured as the average for all agent requests.

The non-scheduled approach favored earlier agents in the sequence, leading to significantly longer waiting and turnaround times for those later in line. AIOS scheduling, on the other hand, effectively regulated both waiting and turnaround times across all agents. This demonstrates AIOS’s ability to optimize resource allocation and prioritize tasks efficiently.
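
The two metrics can be computed as in the sketch below. It assumes the usual scheduling definitions: waiting time runs from submission until a request starts, and turnaround time runs from submission until it completes. The timings are made up for illustration and are not results from the AIOS evaluation.

```python
from statistics import mean


def metrics(requests):
    """requests: list of (submitted, started, finished) times in seconds."""
    waiting = [started - submitted for submitted, started, _ in requests]
    turnaround = [finished - submitted for submitted, _, finished in requests]
    return mean(waiting), mean(turnaround)


# Made-up timings: three requests submitted together and served one after another.
requests = [(0, 0, 2), (0, 2, 4), (0, 4, 9)]
avg_wait, avg_turnaround = metrics(requests)
print(avg_wait, avg_turnaround)
```

In this example the last request waits 4 seconds before it starts, which shows how requests later in a sequence accumulate waiting time when earlier ones run to completion first.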

AIOS: A Foundation for the Future of Intelligent Agents

This article explored AIOS, a groundbreaking LLM operating system designed to integrate large language models as the core (“brain”) of the system. AIOS empowers context switching, resource optimization, tool services for agents, access control, and concurrent agent execution. The AIOS architecture holds immense potential for facilitating the development and deployment of robust LLM-based agents, paving the way for a more effective, cohesive, and intelligent AI ecosystem.

In essence, AIOS tackles the challenges associated with managing LLM-based agents, fostering a future where these intelligent entities can operate seamlessly and efficiently.