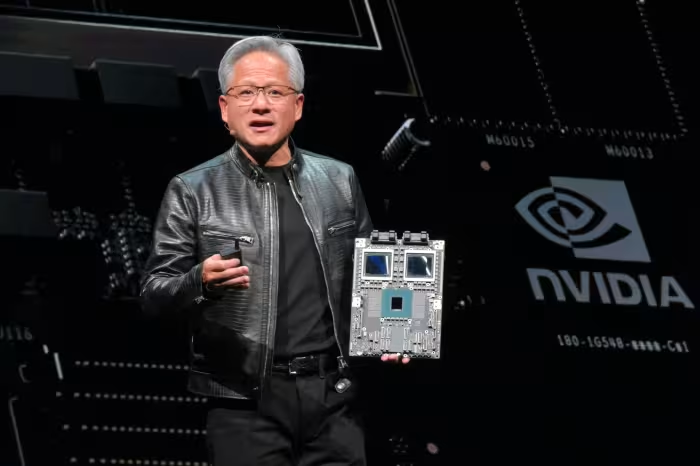

At Computex 2024 in Taipei, NVIDIA CEO Jensen Huang offered a glimpse of where the company’s AI computing roadmap is headed. Two announcements took center stage: the Blackwell Ultra chip, slated for release in 2025, and its successor, the Rubin AI platform, scheduled to launch in 2026.

Rubin: A Leap Forward in AI Processing Power

The Rubin platform marks a major step in NVIDIA’s AI ambitions. As the successor to the Blackwell architecture, Rubin promises a significant leap in processing capability. Huang described the current landscape as one of “computation inflation”: demand for data processing is growing faster than conventional computing can efficiently keep up with. To make the case for accelerated computing, he cited striking figures of up to 98% lower cost and 97% lower energy consumption. If Rubin continues that trajectory, it would strengthen NVIDIA’s position as a frontrunner in the AI chip race.
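Reductions of this size usually come from measuring cost and energy per unit of work rather than per machine. The sketch below shows that arithmetic with purely hypothetical inputs: a system that finishes the same job 100 times faster while costing 1.5 times as much and drawing 3 times the power. These multipliers are illustrative assumptions, not figures from NVIDIA’s keynote or any published Rubin specification.

```python
# Hypothetical inputs, chosen for illustration only (not NVIDIA or Rubin data).
SPEEDUP = 100.0          # new system completes the same job 100x faster
COST_MULTIPLIER = 1.5    # new system costs 1.5x the baseline
POWER_MULTIPLIER = 3.0   # new system draws 3x the baseline power


def reduction_per_job(resource_multiplier: float, speedup: float) -> float:
    """Fractional reduction in a resource (cost or energy) spent per job.

    Per-job cost scales as system cost / jobs completed, and per-job energy
    scales as power * time per job, so both equal multiplier / speedup
    relative to the baseline.
    """
    return 1.0 - resource_multiplier / speedup


cost_cut = reduction_per_job(COST_MULTIPLIER, SPEEDUP)
energy_cut = reduction_per_job(POWER_MULTIPLIER, SPEEDUP)

print(f"Cost per job reduced by {cost_cut * 100:.1f}%")
print(f"Energy per job reduced by {energy_cut * 100:.1f}%")
```

Under these assumed inputs, a machine that is more expensive and more power-hungry in absolute terms still cuts per-job cost by about 98.5% and per-job energy by 97%, which is the shape of argument behind headline percentages like the ones above.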

Lifting the Veil on Rubin’s Architecture

Huang shared only a few details about the Rubin platform. It will pair next-generation GPUs with a new central processor codenamed “Vera.” Critically, the platform will also use HBM4, the next generation of high-bandwidth memory. High-bandwidth memory has become a bottleneck in AI accelerator production because demand has far outstripped supply. Leading supplier SK Hynix Inc. is already facing significant backlogs, underscoring how fiercely chipmakers compete for this component.

A Race for AI Supremacy: NVIDIA vs. AMD

NVIDIA’s commitment to an annual release cycle for its AI chips reflects intensifying competition in the AI chip market. As NVIDIA works to defend its lead, rivals are moving aggressively. During her opening keynote at Computex, AMD Chair and CEO Lisa Su highlighted the growing momentum of the AMD Instinct accelerator family and unveiled a multi-year roadmap that commits to annual releases with improvements in AI performance and memory.

AMD’s roadmap begins with the AMD Instinct MI325X accelerator, which AMD says offers industry-leading memory capacity and bandwidth, slated for release in Q4 2024. The company also previewed its 5th Gen AMD EPYC processors, codenamed “Turin,” which are built on the “Zen 5” core and expected in the second half of 2024. Looking further ahead, AMD plans to release the AMD Instinct MI400 series in 2026, based on the AMD CDNA “Next” architecture and promising substantial performance and efficiency gains for AI training and inference.

Profound Implications and Challenges Ahead

The Rubin platform, together with NVIDIA’s commitment to annual AI accelerator updates, has far-reaching implications for the AI industry. A faster release cadence should bring more efficient and cost-effective AI hardware to market sooner, supporting advances across many sectors.

However, the path forward is not without challenges. Strong demand for HBM4 and supply constraints at memory makers such as SK Hynix could affect Rubin’s production schedule and availability. NVIDIA will also need to balance performance, efficiency, and cost so that the platform remains accessible and viable for a broad range of customers. Finally, smooth integration with existing systems will be essential to encourage adoption and minimize disruption for users.

As Rubin approaches its 2026 launch, businesses and researchers should prepare to take advantage of these advances. Organizations that adopt it effectively could realize substantial efficiency gains and a meaningful competitive edge.